Aarif Nakhooda is the CFO of CoreAI and Client Success at San Francisco, California-based Keystone.ai, a global technology and advisory firm for large enterprises, law firms and governments. Views are the author’s own.

In a previous article, I argued that traditional ROI is an imperfect — and often misleading — tool for evaluating artificial intelligence investments.

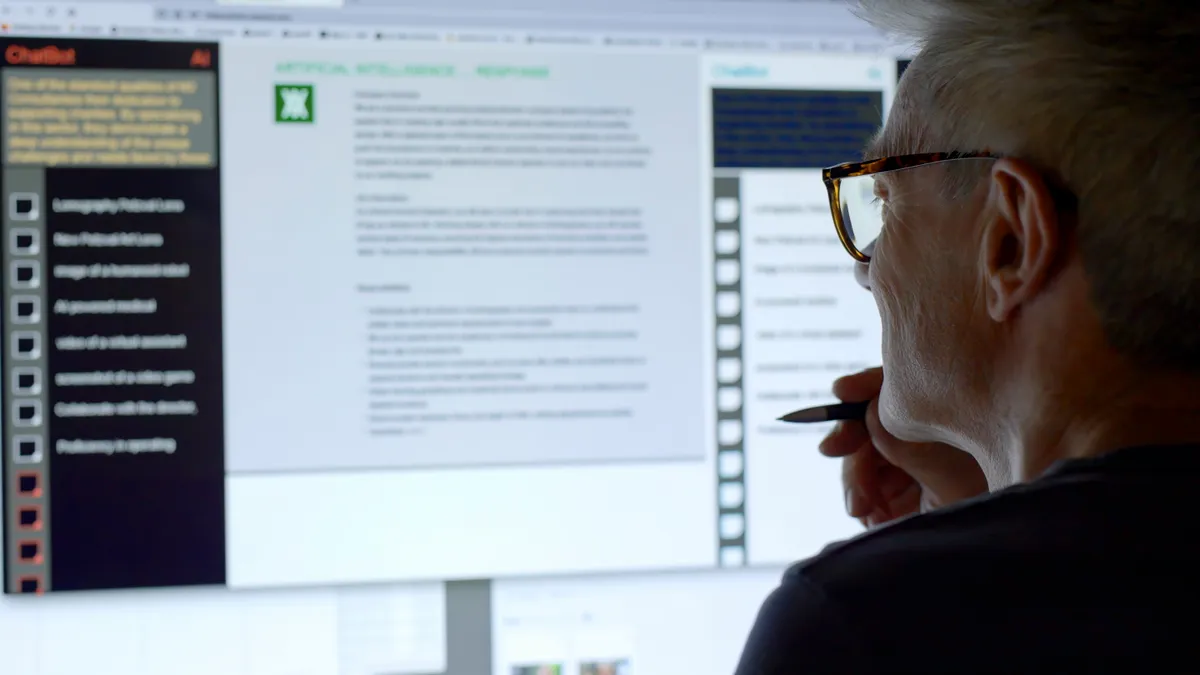

AI doesn’t deliver value in the tidy, linear way we expect from capital projects or targeted marketing spend. Instead, it moves micro-levers across complex systems, producing both immediate and downstream effects that are hard to isolate, quantify or time-box.

So, if ROI falls short, the natural question is: What should CFOs use instead?

My answer is simple: use AI to measure AI. If AI systems are too complex and dynamic to be assessed with static financial models, then the solution is to apply intelligent, adaptive measurement tools such as digital twins and downstream impact analysis.

Digital twins

Imagine trying to understand whether a single marketing nudge — an email, a promotion, or a product recommendation — caused a customer to convert. Traditional A/B testing gives you a rough comparison: one group receives the nudge, one does not. But what if testing isn’t feasible, ethical, or scalable? In industries like healthcare, we can’t deny one group a life-saving treatment. In marketing, turning off campaigns for half your customer base is expensive and impractical.

This is where digital twins come in. A digital twin is a simulated version of a real person or event, created using AI and historical data. It allows you to run experiments without real-world disruption. One version of the “customer” gets exposed to the treatment (say, streaming a video during a free trial), while the twin does not. By comparing the outcomes — conversion rates, revenue, long-term engagement — we isolate the true effect of the action.
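To make the comparison concrete, here is a minimal sketch that uses nearest-neighbor matching as a simple stand-in for an AI-built twin. For each customer who received the treatment, it finds the most similar customer who did not and compares their outcomes. The `Customer` fields, the `estimate_effect` helper and the data are all hypothetical, chosen only for illustration; a production twin would be built from far richer historical data.

```python
from dataclasses import dataclass
from math import dist
from statistics import mean


@dataclass
class Customer:
    features: tuple[float, ...]  # hypothetical: (tenure in months, prior spend)
    treated: bool                # True if the customer streamed a video in the trial
    converted: int               # 1 if the customer converted to paid, else 0


def estimate_effect(customers: list[Customer]) -> float:
    """Average outcome difference between each treated customer and its twin.

    The "twin" here is simply the closest untreated customer by Euclidean
    distance on the feature tuple, an assumption made for this sketch.
    """
    treated = [c for c in customers if c.treated]
    untreated = [c for c in customers if not c.treated]
    differences = []
    for customer in treated:
        twin = min(untreated, key=lambda u: dist(customer.features, u.features))
        differences.append(customer.converted - twin.converted)
    return mean(differences)


# Made-up data for illustration only.
customers = [
    Customer((1.0, 20.0), True, 1),
    Customer((1.1, 21.0), False, 0),
    Customer((5.0, 80.0), True, 1),
    Customer((5.2, 79.0), False, 1),
]
effect = estimate_effect(customers)
```

With this toy data, one treated customer converted where the twin did not, and the other pair behaved identically, so the estimated effect is the average of those two differences.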

Unlike A/B testing, digital twins scale across thousands of variables, touchpoints and customer segments. They allow CFOs to trace not only direct impacts, but long-term effects: how small interventions ripple forward through customer behavior and business results.

Many CFOs struggle with the intangibles of AI — things like customer engagement, content consumption or speed of decision-making. These behaviors feel positive, but how do we assign them dollar value?

Digital twins help solve this. Suppose a customer streams a piece of content during a free trial, while the simulated twin does not. Twelve months later, the customer has spent more, remained subscribed longer, or referred others. We’ve now traced a causal, financial link between what was once an intangible behavior and measurable business outcomes.

This turns what CFOs might dismiss as “soft metrics” into plan-worthy inputs — forecastable, trackable and attributable.

Downstream impact analysis

AI actions often lead to compounding effects over time. A customer influenced by a discount today might not just buy once; they might change their behavior entirely: reorder sooner, upgrade later or convert others.

Downstream impact analysis lets us model those long-term, evolving journeys using digital twins. We can observe how a single input, such as watching a tutorial video, clicking an ad or receiving a shipping offer, affects not just one transaction but the customer’s lifetime value.
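A rough sketch of the arithmetic, assuming the only thing the intervention changes is a constant monthly retention rate. The function name, the revenue figure and both retention rates are hypothetical; in practice they would come from the twin comparison rather than being typed in.

```python
def expected_lifetime_value(monthly_revenue: float,
                            monthly_retention: float,
                            months: int) -> float:
    """Expected revenue over a horizon with a constant monthly retention rate.

    Month 0 is always paid; each later month is weighted by the probability
    that the customer is still subscribed.
    """
    return sum(monthly_revenue * monthly_retention ** t for t in range(months))


# Hypothetical inputs: the tutorial video lifts retention from 90% to 93%.
baseline = expected_lifetime_value(10.0, 0.90, 12)
with_tutorial = expected_lifetime_value(10.0, 0.93, 12)
downstream_lift = with_tutorial - baseline

# Looking at the first month alone shows no difference at all.
first_month_lift = (expected_lifetime_value(10.0, 0.93, 1)
                    - expected_lifetime_value(10.0, 0.90, 1))
```

The point of the comparison is that a single-transaction view (`first_month_lift`) reports nothing, while the twelve-month view (`downstream_lift`) captures the compounding effect.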

This kind of analysis is critical for CFOs, who need to justify not just short-term returns, but the full arc of value creation AI can unlock.

Entitlement analysis

Sometimes, the right way to evaluate AI isn’t to compare treatment and control but to ask: how close are we to the ideal outcome?

In entitlement analysis, we define a theoretical “perfect” scenario, one where every decision is optimized for cost, timing or customer experience, and compare it to real-world performance. For example, in logistics, a perfect system might route every shipment from the closest warehouse at the lowest cost. That’s likely unachievable in practice, but it gives us a benchmark. If the gap between your current state and perfection is $2 billion, every portion of that gap your algorithms close is tangible value you’ve created.
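The benchmark comparison can be sketched in a few lines. This assumes you can compute, for each shipment, both the actual cost and the cost under the ideal routing; the function names and the cost figures are hypothetical.

```python
def entitlement_gap(actual_costs: list[float], ideal_costs: list[float]) -> float:
    """Total cost above the theoretical ideal for the same set of shipments."""
    return sum(actual_costs) - sum(ideal_costs)


def share_of_gap_closed(gap_before: float, gap_after: float) -> float:
    """Fraction of the original gap that has been eliminated."""
    if gap_before <= 0:
        raise ValueError("gap_before must be positive")
    return (gap_before - gap_after) / gap_before


# Made-up per-shipment costs for illustration only.
ideal = [8.0, 5.0, 7.0]           # every shipment routed perfectly
before_ai = [12.0, 9.0, 9.0]      # actual costs before the routing model
after_ai = [10.0, 6.0, 8.0]       # actual costs after the routing model

gap_before = entitlement_gap(before_ai, ideal)
gap_after = entitlement_gap(after_ai, ideal)
closed = share_of_gap_closed(gap_before, gap_after)
```

Tracking `closed` period over period shows whether the system is moving toward the benchmark, without needing a control group.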

This technique doesn’t require AI to implement, but it’s an excellent way to evaluate whether your AI is improving performance over time — especially in operations, fulfillment or supply chain use cases.

From measurement to action

Once CFOs can isolate and quantify the value of intangible inputs, the next step is planning. Finance teams can take these insights and build causal models tied to specific events.

Want to improve trial-to-paid conversion? Identify what drives it — video engagement, early usage, time to first value — and plan to increase those behaviors. Then forecast revenue based on the expected response rates observed in your synthetic control models.
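As a simple illustration of that planning step, the forecast below multiplies the number of planned nudges by an incremental conversion rate and the revenue per conversion. All three inputs and both function names are hypothetical; the conversion rate is assumed to come from the twin-based comparison described earlier.

```python
def forecast_incremental_revenue(planned_nudges: int,
                                 incremental_conversion_rate: float,
                                 revenue_per_conversion: float) -> float:
    """Expected extra revenue from a planned volume of nudges."""
    return planned_nudges * incremental_conversion_rate * revenue_per_conversion


def on_track(actual_revenue: float, forecast: float, tolerance: float = 0.10) -> bool:
    """True if actual revenue is no more than `tolerance` below the forecast."""
    return actual_revenue >= forecast * (1 - tolerance)


# Hypothetical plan: 50,000 nudges, a 2-point conversion lift, $120 per conversion.
forecast = forecast_incremental_revenue(50_000, 0.02, 120.0)
status = on_track(actual_revenue=115_000.0, forecast=forecast)
```

The 10% tolerance is an arbitrary choice for the sketch; a finance team would set it to match its own variance thresholds.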

This turns AI from an experimental tool into an engine of deliberate financial planning. You know how many nudges you want to deliver, what return they should drive, and whether you’re on track — all while building stronger links between marketing, product and finance.

Editor’s note: This article is the second in a two-part series. Part one explained why traditional ROI tools are not ideal for evaluating AI investments.