As more companies roll out generative AI-driven products and services, uncertainty around pricing for the AI chips that underpin the technology will likely be top of mind for CFOs.

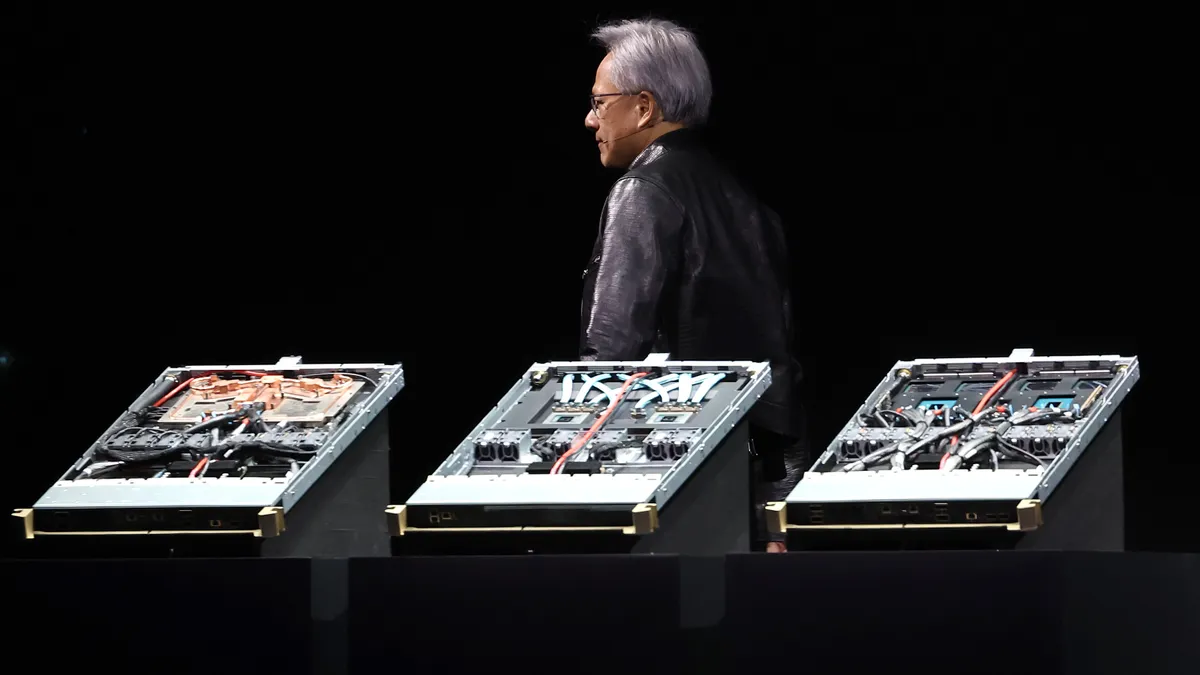

Many are eyeing pricing from chipmaker Nvidia, which commands an estimated 80% of the AI chip market, according to The Wall Street Journal, though Amazon, Google, Meta and Microsoft are working to build their own AI chips. Nvidia typically doesn’t disclose its pricing publicly, but comments from CEO Jensen Huang around the company’s annual GTC developer conference last week are putting a focus on the costs of AI advancements.

Blackwell, Nvidia’s next-generation graphics processing unit announced at its developer conference in San Jose, California, last week, will ship later this year. It combines two chips and is 30 times faster than the current generation for large language model inference workloads while also reducing cost and energy consumption, according to the company.

A graphics processing unit is a type of computer chip that renders graphics and images. GPUs can carry out many mathematical calculations at the same time, and are seen as critical for artificial intelligence systems.

“Generative AI, as you know, requires a lot of flops… an insane amount of computation,” Huang said at the conference. “It can now be done cost effectively [now that] consumers can use this incredible service called ChatGPT.”

Pricing considerations

Huang told CNBC last week that the cost of Nvidia’s Blackwell processor will hover around $30,000 to $40,000 per unit. That compares with current-generation H100 chips, which range in price from $25,000 to $40,000 per chip, CNBC reported, citing industry estimates.

At GTC, however, Huang walked back the chip price estimates he gave CNBC, noting that price points for chips and enabling tools are part of a larger, overarching platform cost.

“The Blackwell system includes NVLink [connectivity technology], and so the partitioning is very, very different… we will have pricing for all of them,” Huang said. “The pricing … will always as usual come from total cost of ownership.”

A spokesperson for Nvidia declined to comment when asked for more details about chip pricing.

Michael Fauscette, CEO and founder of Arion Research, said most companies rolling out AI won’t be paying Nvidia directly for the GPUs. Instead, they would access them as part of a subscription package from a third-party enterprise technology provider. If prices go up, the costs could be passed along to customers, but savings and efficiency gains would likely offset the higher prices, he said.

Third-party cloud vendors offering access to Nvidia AI chips include Amazon Web Services, Oracle, Microsoft and Google, according to Holger Mueller, vice president and principal analyst at Constellation Research.

When offered through cloud service providers, chip costs “may go down… faster platforms mean that more workloads can run… so cloud vendors can run more workloads on the same machine,” he said. However, companies that need to procure chips directly from Nvidia may face higher costs, he noted.

Regardless of the pricing outcome, when it comes to AI, the question is not if but when companies will move forward, Fauscette suggested.

“That ship has sailed,” he said. “CFOs are worried about cost… but at the same time, the more it becomes a strategic advantage, a competitive advantage for the business, the more they’re going to have to invest in it.”